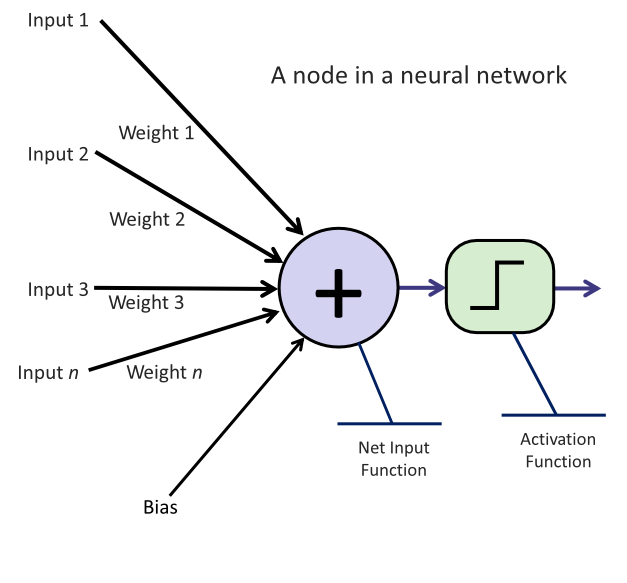

Neurons, often called nodes, are a neural network’s fundamental building blocks. These computational units are loosely inspired by biological neurons in the human brain: each one takes in several signals, combines them, and produces a single output.

At its core, a neuron consists of:

- Inputs: Each neuron receives one or more inputs. These can be raw data values (in the input layer) or the outputs from neurons in previous layers.

- Weights: Each input has an associated weight, a numerical parameter that determines how strongly that input influences the neuron’s output. Weights are adjusted during training, using gradients computed by back-propagation, to minimize the error in the network’s predictions. Back-propagation and training in general are explained in more detail later in this module. Each input is multiplied by its weight, and the resulting products are summed.

- Bias: This numerical parameter is added to the weighted sum of the inputs. The bias shifts that sum independently of the inputs, so the neuron can produce a non-zero result even when every input is zero, which gives it more flexibility in what it can represent. It plays the same role as the intercept in linear regression.

- Activation Function: After summing the weighted inputs and the bias, the result is passed through an activation function. This function can introduce non-linearity to the model, allowing the network to capture complex relationships in the data.
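
The four pieces above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the names `neuron_output` and `sigmoid` are made up for this example, and the sigmoid is just one common choice of activation function assumed here.

```python
import math


def sigmoid(z: float) -> float:
    """One common activation function (an assumption for this sketch)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Compute a single neuron's output: activation(sum(x_i * w_i) + bias)."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")

    # Multiply each input by its weight and add up the products.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))

    # Add the bias, then pass the result through the activation function.
    return activation(weighted_sum + bias)


# Two inputs whose weighted contributions cancel out (0.5 - 0.5 = 0),
# so with a bias of 0 the sigmoid receives 0 and returns 0.5.
print(neuron_output([1.0, 2.0], [0.5, -0.25], bias=0.0))  # 0.5
```

Changing only the bias in this call shifts the value fed to the activation function, which is the intercept-like behavior described in the list above.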